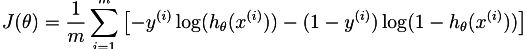

I just started taking Andrew Ng's course on Machine Learning on Coursera. The topic of the third week is logistic regression, so I am trying to implement the following cost function.

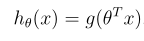

The hypothesis is defined as:

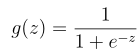

where g is the sigmoid function:

$$g(z) = \frac{1}{1 + e^{-z}}$$
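The `sigmoid` helper called in the code further down is not shown in this post. A minimal sketch of it, assuming the standard element-wise logistic function (the body is an assumption, not the course's reference code), might look like this:

```octave
function g = sigmoid(z)
  % Element-wise logistic function; z may be a scalar, vector, or matrix.
  g = 1 ./ (1 + exp(-z));
end
```

Because the division and `exp` are applied element-wise, passing a vector to this sketch returns a vector of the same size rather than a single number.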

This is how my function looks at the moment:

```octave
function [J, grad] = costFunction(theta, X, y)
  m = length(y); % number of training examples
  S = 0;
  J = 0;
  for i=1:m
    Yi = y(i);
    Xi = X(i,:);
    H = sigmoid(transpose(theta).*Xi);
    S = S + ((-Yi)*log(H)-((1-Yi)*log(1-H)));
  end
  J = S/m;
end
```

Given the following values:

```octave
X = [magic(3) ; magic(3)];
y = [1 0 1 0 1 0]';
[j g] = costFunction([0 1 0]', X, y)
```

`j` comes back as the vector `0.6931 2.6067 0.6931`, even though the result should be the scalar `j = 2.6067`. I assume the problem is with `Xi`, but I just can't see the error.
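One way to narrow this down is to inspect the size of the value passed to `sigmoid` for a single row. The sketch below reuses the test values from this post. The variable names `elementwise` and `inner` are made up for illustration, and the comparison against the matrix product `Xi * theta` is only an assumption about what the hypothesis intends:

```octave
theta = [0 1 0]';
X = [magic(3); magic(3)];
Xi = X(1, :);                          % first training example, a 1x3 row vector

elementwise = transpose(theta) .* Xi;  % element-wise product, as in the loop
inner = Xi * theta;                    % matrix (inner) product, for comparison

disp(size(elementwise));               % size of the element-wise result
disp(size(inner));                     % size of the inner-product result
```

If the two sizes differ, the shape of `J` follows the shape of whatever goes into `sigmoid`, which would explain getting three values back instead of one.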

I would be very thankful if someone could point me in the right direction.